Are AI-driven B2B marketing and sales solutions truly the magic wand they're made out to be? What about AI ethics? From my perspective, communicating your AI Ethics Standards will become an impactful competitive advantage very soon.

Before we dive into this article, let me set the right context. This is not a definitive guide on AI Ethics. I'm not a researcher or hard-core developer. First and foremost, I'm an enthusiast for using AI in a business context.

This post reflects my personal perspectives and is meant to spark conversations on AI Ethics - nothing more or less. I'm looking to share my insights and views on a topic that needs more attention from a broader audience. Make of it what you will. Caveat: I have used GPT-4 to streamline the writing in parts of this article.

I help companies leverage AI tools to stay ahead of the competition, and yet we have to navigate the ethical landscape to avoid costly repercussions. It's easy to create 10-20 content pieces with GPT-4, Midjourney, and the like. What's hard is doing that ethically.


Imagine a world where an omnipotent force could read your mind and predict your every marketing and sales need. It's tempting to believe that artificial intelligence (AI) is that force, revolutionizing the B2B marketing and sales space with unprecedented efficiency and precision. But is this sparkling vision a reality, or just a mirage?

As a trusted advisor in the realm of AI marketing, I invite you on a journey to unveil the overlooked shadows cast by AI tools in the B2B marketing and sales arena. Frankly, AI will be everywhere soon, way beyond just B2B marketing and sales - but that's where my current focus lies.

Together, let's examine the unspoken drawbacks and ask the questions that are seldom asked because you'd get roasted by AI maximalists if you dared to...

The Loss of Human Touch: Are We Sacrificing Empathy for Efficiency?

AI tools may be tireless workhorses, but can they replicate the human touch? I've recently read a post from my friend Thomas Power, referencing how Emotional Labour is probably the last type of labour that humans will do.

Marketing and sales thrive on building relationships, and genuine human connections can make all the difference when closing a deal. I personally don't think a machine learning algorithm can truly capture human empathy and emotion. I do think, however, that AI can supercharge personal branding, marketing, and sales efforts.

Yet, we already see GPT-4 mocking its users - which to me is a clear indicator of at least a spark of humor and emotion. One of my copywriting heroes, Sean Vosler, told GPT-4 not to use the phrase "In conclusion" in its output. See for yourself what GPT-4 came up with!

Let's explore the role of the Human Touch in B2B marketing and sales and how AI ethics can help us keep that touch alive.

The Essence of Marketing is Storytelling

All marketing campaigns that drive results use storytelling in some form to invoke emotion. AI can obviously be very provocative, create anxiety, or spark curiosity - depending on your prompts.

Without a clear ruleset in your AI ethics playbook, it's easy to lose yourself in the overly exaggerated AI outputs and go balls to the wall with your storytelling. You'll step way over the fine line that makes storytelling work if you do so.

Here's an example of where GPT4 would have gone vastly beyond what's appropriate:

I recently got mentioned in Daren Smith's "Shine A Light" blog posts where he shares the profiles of 100 creators you should follow if you're building your business. Through his content, Daren is a mentor of mine even though he probably doesn't realize it - so being on this list means a lot to me.

To thank him, I shared my appreciation in a post on LinkedIn.

Follow the link to see my human response with the (hopefully) appropriate level of connectedness and the response I created with GPT4 to play around. You'll see a night and day difference.

Is AI Pirating Content?

"AIs corpus of knowledge is based on the uncredited work of others. To me that's one of the central dilemmas of AI. It's incredibly powerful, but you could argue (for certain uses) that it's intellectual plagiarism on a massive scale."

Glen Long - COO, Smart Blogger

That quote came from a conversation I had with Glen on LinkedIn.

As AI technology continues to advance and become more sophisticated, it is increasingly being used to generate content for various purposes. However, one major concern with this trend is the potential for AI to pirate content and not properly attribute sources.

This issue raises ethical and legal questions surrounding intellectual property rights, fair use, and accountability in the digital age.

I don't think there is a definitive answer on how to treat this dilemma other than being transparent about the use of AI when you're creating content. The dilemma is also the flip side of what makes GPT-4 so powerful: it taps into a huge array of knowledge and can combine gazillions of data points.

AI-powered content generation systems, like GPT-4, have the capacity to generate human-like text based on the data they have been trained on.

This can result in the unintentional reproduction of copyrighted material or plagiarism without proper attribution - without you even noticing it unless you do extensive research for each content piece you create.
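If you already know which sources a draft might lean on, a rough first check is to measure how many word sequences the draft shares verbatim with them. Here's a minimal sketch of that idea. The function names, the sample texts, and the 20% threshold are all my own assumptions for illustration, and this is no substitute for a real plagiarism checker or legal review.

```python
import re

def word_ngrams(text: str, n: int = 5) -> set:
    """Return the set of lowercase word n-grams found in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap_ratio(draft: str, source: str, n: int = 5) -> float:
    """Share of the draft's n-grams that also appear verbatim in the source."""
    draft_grams = word_ngrams(draft, n)
    if not draft_grams:
        return 0.0
    return len(draft_grams & word_ngrams(source, n)) / len(draft_grams)

# Hypothetical texts, for illustration only.
source = "Marketing and sales thrive on building relationships with real people."
draft = "We believe marketing and sales thrive on building relationships every day."

ratio = overlap_ratio(draft, source, n=4)
if ratio > 0.2:  # arbitrary threshold, tune it for your own content
    print(f"Review before publishing: {ratio:.0%} of 4-grams match a known source")
```

This only catches word-for-word overlap with sources you already have on hand. Paraphrased ideas will slip through, which is exactly why transparency about AI use still matters.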

AI Ethics Are Needed To Keep Building Authentic Relationships

I've got friends who write every single email to their customers and coworkers using AI. Is that still genuine communication? Are they using AI as a tool, much as you'd use Grammarly or another spell checker? Or are they losing touch with the relationship and handing it to Big Brother?

It's clearly not an easy question to answer.

Let's not forget that the entire world is becoming increasingly digital. The rollout of CBDCs and tokenization of assets will only accelerate that trend.

That's why I think forging authentic relationships with customers is more important than ever. Although AI tools have their place in customer research, content creation, and data analysis, they're not the end-all-be-all.

It's the genuine connection with another human being that fosters trust, loyalty, and lasting bonds - provided you can deliver on your promises. As marketers, we must remember that humans crave connection, empathy, and being understood.

Putting these values into your AI Ethics playbook is critical - you'll want to ensure that however you set up your Generative AI strategy, empathy and understanding don't get lost.

Creativity And Intuition: Leveraging Human Insight

You also need strong AI Ethics guidelines around selecting what AI output and analysis to use and what to ignore. While generative AI tools can process more data than humans ever could and uncover hidden patterns, they're also notorious for drawing wrong conclusions or sharing ideas that would be totally inappropriate to execute.

They don't have the unique spark of human creativity and intuition. It is this creative spirit that pushes the boundaries of exceptional marketing and allows us to innovate, adapt, and evolve, and it is what AI ethics need to preserve.

Ethical Decision-Making: Guiding Our AI Tools With A Moral Compass

Have you met AutoGPT yet? It's an "experimental open-source application showcasing the capabilities of the GPT-4 language model." It chains together LLM "thoughts" to autonomously achieve whatever goal you set. In short, it runs GPT-4 fully autonomously.
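To make the "chaining thoughts" idea concrete, here's a toy sketch of such a loop with a human approval gate bolted on. To be clear, this is not AutoGPT's code. `run_agent`, `fake_think`, and `reviewer` are names I made up, and the "model" is a hard-coded stand-in so the example runs without any API.

```python
from typing import Callable, List

def run_agent(goal: str,
              think: Callable[[str, List[str]], str],
              approve: Callable[[str], bool],
              max_steps: int = 5) -> List[str]:
    """Chain model "thoughts" toward a goal, asking a reviewer before each step."""
    history: List[str] = []
    for _ in range(max_steps):  # hard cap so the loop cannot run forever
        step = think(goal, history)
        if step == "DONE":
            break
        if not approve(step):
            history.append(f"REJECTED: {step}")
            continue
        history.append(step)
    return history

# Stand-in for a language model call (an assumption, not a real API).
def fake_think(goal: str, history: List[str]) -> str:
    plan = ["research audience", "scrape personal emails", "draft outreach post"]
    return plan[len(history)] if len(history) < len(plan) else "DONE"

# Stand-in for a human or policy check.
def reviewer(step: str) -> bool:
    return "personal" not in step

result = run_agent("launch a campaign", fake_think, reviewer)
print(result)
```

The point of the sketch is the `approve` hook and the step limit: even a fully autonomous loop can be built so that someone, or some written policy, gets a say before each step counts.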

When you check the GitHub repository, you'll find 45k stars (developers "liking" this project) but not a single discussion around AI ethics.

We're still VERY early with AutoGPT and we're not going to stop its development no matter what we do. Sooner rather than later, AI tools will draw their own conclusions. That's what self-driving cars already do - impacting lives along the way...

Tools like AutoGPT, Synthesia, BHuman, or the GPT Plugins make the need for AI Ethics more than obvious.

For example: we'll soon need copyright on voices as it's super easy to replicate everybody's voice nowadays. Even Arnold Schwarzenegger uses AI to turn his emails into a podcast.

We'll also need to be careful with how much access we give AI to our data. Did you see that HubSpot has launched ChatSpot, an AI with access to your entire CRM? That's a ridiculous amount of personal data.

I don't know how to add a moral compass to that development process. Who's the one to judge what is "right" and what is "wrong" behavior? Elon Musk just slammed a BBC reporter on this exact topic. We sure need to find a way to make a somewhat decentralized and democratic decision about how AI Ethics should tackle this. (Yes, I think this is a dream that won't happen.)

Data Bias: Are We Teaching Our AI Tools to Perpetuate Prejudices?

Just like a sponge absorbing the contents of its surroundings, AI tools learn from the data they're fed. But what if the data we're using to train our algorithms is biased? Without addressing these biases, we risk creating AI tools that amplify stereotypes and perpetuate prejudices. Are we inadvertently nurturing AI monsters that might ultimately betray our values and intentions?

The challenge that AI Ethics needs to tackle here is the very way AI is trained: the data we feed into the systems. AI learns from the information provided to it, be it text, images, audio, or video. If this data contains implicit biases - which is highly likely because what's 100% unbiased?! - the AI will absorb and replicate these biases. We saw that in 2016 with Microsoft's Tay AI that turned Nazi.

Here's a compilation of its "best" tweets to show what went wrong with Tay. The video was originally posted on YouTube but removed for "violating Hate Speech" - here's a link to the Internet Archive: https://archive.org/details/TheRiseAndDeathOfTayandYou

I don't see a way to remove biases from AI output because humans are biased per se - no matter how you turn it. It's not a nice truth... the only way around this from my perspective is to raise awareness of biases in AI-driven content and decision-making.
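Raising awareness can start with something as simple as counting. Here's a minimal sketch that compares how often an AI-driven process says "yes" across groups. The lead records, field names, and helper functions are hypothetical, and a skewed ratio doesn't prove bias on its own. It's a prompt for a human to look closer.

```python
from collections import defaultdict

def selection_rates(records, group_key, outcome_key):
    """Return the share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        if row[outcome_key]:
            positives[row[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal treatment."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical output of an AI lead-scoring tool.
leads = [
    {"region": "EU", "qualified": True},
    {"region": "EU", "qualified": True},
    {"region": "EU", "qualified": False},
    {"region": "EU", "qualified": True},
    {"region": "APAC", "qualified": True},
    {"region": "APAC", "qualified": False},
    {"region": "APAC", "qualified": False},
    {"region": "APAC", "qualified": False},
]

rates = selection_rates(leads, "region", "qualified")
disparity = disparity_ratio(rates)
print(rates, round(disparity, 2))
```

In this made-up data, EU leads are qualified three times as often as APAC leads. Whether that reflects the market or the training data is a question the tool can't answer for you.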

Privacy Invasion: Are We Trading Confidentiality for Convenience?

AI tools that excel in targeting and personalization often rely on vast amounts of data, but at what cost? Are we compromising our customers' privacy in our quest for better results? The line between helpful and intrusive can be razor-thin, and crossing it can permanently damage the trust we've worked so hard to build.

Here's what Morten Rand-Hendriksen from LinkedIn Learning had to say about putting proprietary information into ChatGPT:

Many companies around the world are facing a similar situation to Samsung, where their employees inadvertently shared proprietary code with OpenAI by using ChatGPT. The warning applies to any type of data, not just code.

ChatGPT and DALL-E are research projects used to study user interaction with models, and OpenAI retains the right to view data inputted into these tools to improve their services.

If users input company or proprietary information into ChatGPT, they risk leaking sensitive information to a third party.

To avoid this issue, users can either opt for the API option or opt out of data tracking via a form.

- Morten Rand-Hendriksen. Source.

As businesses increasingly adopt AI tools like ChatGPT, privacy concerns surrounding proprietary data are becoming a pressing issue. When companies input sensitive information into ChatGPT, they may inadvertently expose their intellectual property, confidential strategies, and trade secrets, potentially leading to data leaks or unauthorized access.
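A simple habit that helps here is scrubbing obvious secrets from a prompt before it leaves your network. Below is a minimal sketch of that idea. The two patterns and the `redact` helper are my own illustrative assumptions. Real data will need patterns tuned to your systems, and regexes alone won't catch confidential strategy written in plain prose.

```python
import re

# Illustrative patterns only; extend them for your own data.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "API_KEY": re.compile(r"\b(?:sk|pk)-[A-Za-z0-9]{16,}\b"),
}

def redact(prompt: str) -> str:
    """Replace every match of a known sensitive pattern with a placeholder."""
    for label, pattern in REDACTION_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt

raw = "Summarize the call with jane.doe@example.com, key sk-abcdef1234567890ABCD."
clean = redact(raw)
print(clean)  # prints: Summarize the call with [EMAIL], key [API_KEY].
```

You would call `redact` on every prompt right before it is sent to an external service, and keep the unredacted version inside your own systems.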

One alternative to mitigate these privacy concerns is to self-host parts of your AI stack with tools like LangChainJS. Be aware that LangChainJS is an orchestration framework, not a model: if you connect it to a hosted API such as OpenAI's, your prompts still leave your infrastructure. Paired with a model that runs on hardware you control, though, it lets you process data locally, which can significantly reduce the risks of transmitting and storing sensitive information on external servers. In that setup, proprietary data stays within the organization's control, which limits the potential for data breaches or misuse.

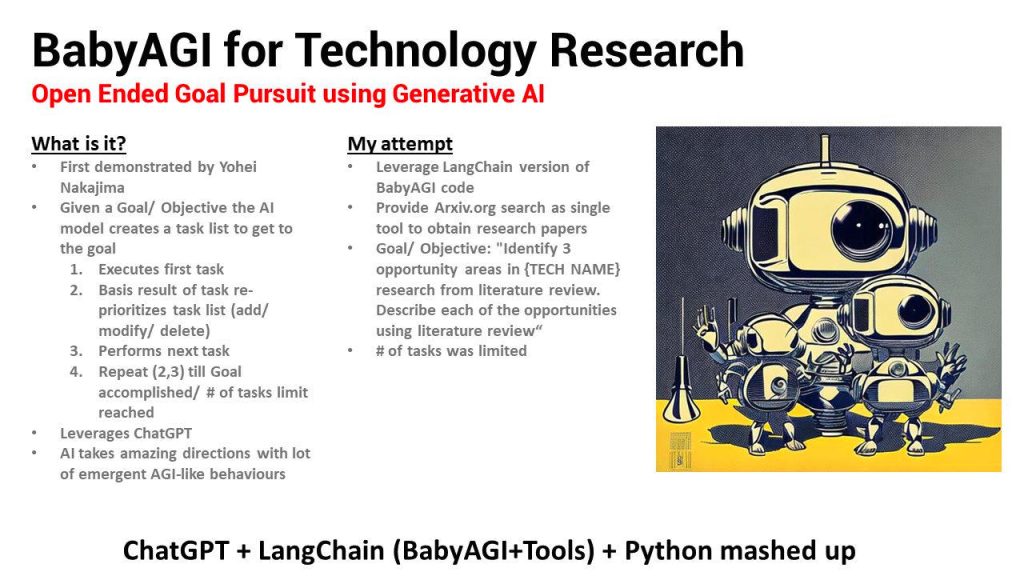

Getting started with LangChainJS is quite easy. It can even be a stepping stone to building autonomous agents like BabyAGI from Yohei Nakajima.

The Dependence Dilemma: Are We Becoming Slaves to Our Own Creations?

The rapid advancement of AI tools has transformed the way we live and work, streamlining tasks and augmenting human capabilities in a multitude of ways. Worldwide business spending on AI was expected to hit $50 billion in 2020 and $110 billion annually by 2024, according to the Harvard Gazette.

However, this growing reliance on AI presents a pressing concern for AI Ethics: the dependence dilemma. As we increasingly lean on AI for decision-making and problem-solving, are we becoming slaves to our own creations, risking the loss of our ability to think for ourselves?

"AI not only replicates human biases, it confers on these biases a kind of scientific credibility. It makes it seem that these predictions and judgments have an objective status."

— Michael Sandel, political philosopher. Source.

The dependence dilemma raises concerns about how we, as humans, may gradually lose our critical thinking and problem-solving abilities if we delegate all tasks to AI. This overreliance can result in a vicious cycle where our cognitive muscles atrophy as we defer to AI systems for even the simplest of tasks.

In the long run, this dependence may leave us vulnerable to the limitations and biases inherent in AI algorithms, impairing our ability to make informed decisions based on our own unique insights and experiences. We might unlearn how to think critically... that's scary if you ask me.

Furthermore, as AI tools become an integral part of our daily lives, we risk becoming complacent and disconnected from the underlying processes that govern these technologies. This detachment can hinder our ability to recognize when an AI-generated solution may not be the most appropriate or ethical choice, potentially leading to uninformed decision-making and unforeseen consequences.

To address the dependence dilemma, striking a balance between utilizing AI tools and preserving our cognitive skills is crucial.

We must honor the value of our own human intuition, creativity, and empathy, and use AI as a complement to, rather than a replacement for, our own abilities. By fostering a symbiotic relationship with AI, we can harness its power while retaining our capacity for independent thought and critical reasoning.

As we continue to integrate AI into our lives, it is essential to stay aware of the dependence dilemma as an AI Ethics challenge and to maintain a healthy balance. By embracing AI as a tool to augment our skills, rather than allowing it to dominate our decision-making processes, we can preserve our ability to think for ourselves and remain in control of our own destinies.

Ethical Conundrums: Are We Blindly Marching into a Moral Minefield?

As AI continues to reshape the landscape of business and marketing, it is essential to recognize the ethical conundrums that accompany this technological revolution. Business owners and marketers must be vigilant in addressing questions of data ownership, accountability, and transparency, lest we blindly march into a moral minefield. (Hint: that's why I stated publicly that I used GPT4 for parts of this article to streamline the writing).

Humans and machines are very complementary.

— Francesca Rossi, AI ethics global leader at IBM Research. Source.

Looking to the future, the onus is on businesses and marketers to establish guidelines for AI Ethics and practices that govern AI usage. This includes securing customer data and respecting privacy, ensuring AI-driven decisions are fair and unbiased, and promoting transparency in AI algorithms to build trust with stakeholders. As the AI landscape evolves, ethical considerations must remain at the forefront of innovation, shaping the development and deployment of these tools.

Ultimately, the future holds immense potential for businesses and marketers that successfully navigate these ethical conundrums. By committing to responsible AI practices, they can build a foundation of trust, loyalty, and long-term success while mitigating potential pitfalls in this rapidly advancing domain.

In conclusion, while AI tools hold great promise in the B2B marketing and sales space, it's crucial to consider both the glittering prizes and the concealed pitfalls. It's our responsibility to ask the difficult questions and keep a discerning eye on the potential drawbacks. By addressing these issues head-on and making informed choices, we can harness the power of AI while maintaining our integrity, empathy, and humanity.